Let’s get this out of the way early: if you feel like everyone suddenly knows more about “AI” than you do, you’re not behind. You’re just being marketed to.

Which, ironically, means that marketing still works.

Right now, “AI” has become one of those catch-all terms that sounds both impressive and vaguely threatening. It’s in decks, pitches, subject lines, and investor updates. And the unspoken message is always the same: use this, or risk becoming irrelevant.

Take a breath. You’re fine.

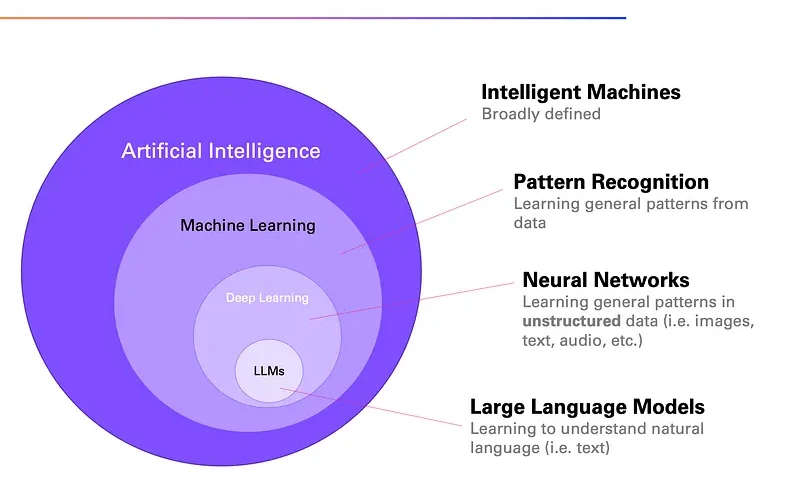

Generally speaking, what most people are actually talking about when they say “AI” is something much more specific — Large Language Models, or LLMs. And understanding what they are (and aren’t) is the difference between using them wisely and letting them drive the bus.

So, What Is an LLM, Really?

The field of Artificial Intelligence in layers.

It’s sparkly autocomplete. Heavy on the sparkle. ✨

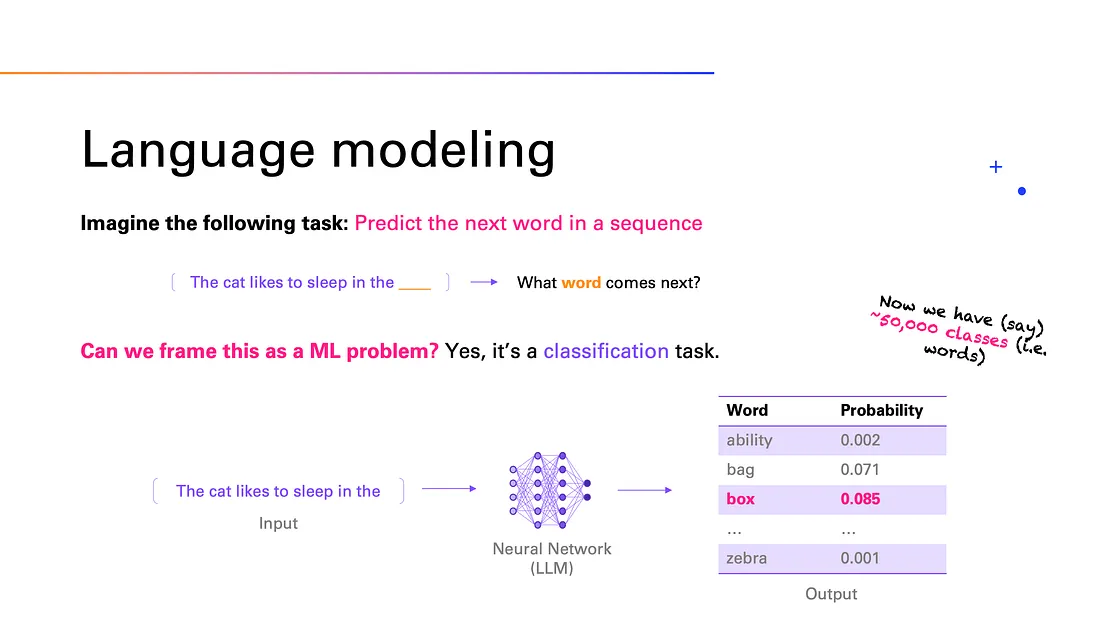

At a very high level, an LLM is a system trained on massive amounts of text to predict what word comes next in a sentence.

That’s it.

With this in mind, we can see that there is no consciousness. No strategy. No intelligence.

And certainly no understanding of your business, your customer, or your media mix.

Language modeling is learning to predict the next word.

That said, they’re excellent at:

- Summarizing information

- Generating first drafts

- Rewriting content in different tones

- Answering well-structured questions based on existing knowledge

However, they are not thinking. They are pattern-matching at scale.

What they learn is statistical: which words tend to follow which, weighted heavily by how often those patterns appear in the training text. Because of that, their outputs can inherit whatever biases that text contains.
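To make "tied to frequency" concrete, here is a deliberately tiny sketch in Python. It is a bigram counter, not how real LLMs are built (they use large neural networks trained on tokens), but it shows the same basic idea: the "prediction" is simply whatever most often came next in the training text. The corpus and the function names `train_bigrams`, `predict_next`, and `next_word_probabilities` are hypothetical, made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, how often each following word appears."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def next_word_probabilities(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts into simple relative frequencies."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


# Hypothetical "training text": two wins, one loss.
corpus = (
    "the campaign performed well . "
    "the campaign performed well . "
    "the campaign performed poorly . "
)
model = train_bigrams(corpus)
print(predict_next(model, "performed"))
print(next_word_probabilities(model, "performed"))
```

Notice that the model never checks whether "well" is true for your campaign. It only reports which word showed up most often after "performed". Skew the corpus, and you skew the answer.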

However confident LLMs sound, that confidence is by design. These tools sound certain because they’re trained to sound certain.

That’s not intelligence. That’s formatting.

Why This Matters for Marketers

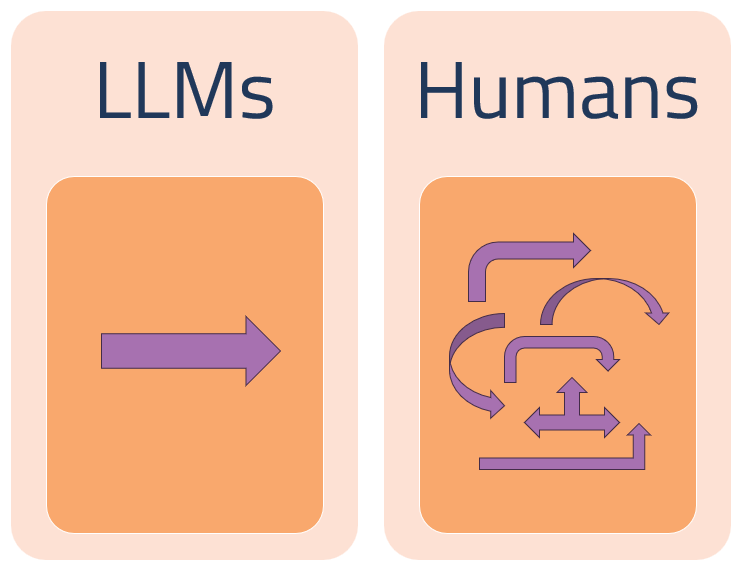

The current narrative suggests LLMs can replace decision-making. That they can plan, optimize, and strategize media better than humans ever could. And that’s where things start to wobble.

LLMs recognize patterns.

Humans recognize meaning.

It’s important to realize that media strategy isn’t just about assembling inputs and producing outputs. It’s about judgment. Trade-offs. Context. Knowing when not to do something.

A model can tell you what has worked historically. It cannot tell you whether it makes sense now, in this market, for this client, with these constraints, and this audience mindset.

Which, inconveniently, is the entire job.

And that gap matters. Luckily, researchers have started to test it.

What Happens When We Let LLMs Decide?

Claims about LLMs often suggest they can reason, optimize, and make decisions the way humans do. As a result, researchers are starting to test that assumption.

A 2025 study published in iScience compared how humans and large language models navigate a shared decision-making scenario: a daily commute where individual choices affect group outcomes, like congestion.

The results were telling.

LLMs struggled in four key areas:

- Group dynamics: LLMs didn’t anticipate how others might behave, and didn’t adapt their decisions to improve outcomes for everyone.

- Strategic flexibility: LLMs tended to stick with their original choice, even when it made things worse. Humans often chose to go against the crowd to reduce congestion.

- Real-world intuition: Humans draw on the lived experience of frustration, delay, and trial-and-error learning. LLMs only know what exists in text, with no practical experience.

- Behavioral diversity: LLMs behaved too similarly, either overly conservative or very erratic, which led to inefficient outcomes. A mix of cautious and bold behaviors is needed for the best results.

LLMs stick with their choice. Humans are flexible and adapt.

To be fair, this isn’t a flaw. It’s just what happens when you ask a spreadsheet to drive a car.

Technically impressive, but emotionally terrifying.

In short, LLMs can simulate decisions, but they don’t understand the consequences of those decisions in context. That distinction matters in media strategy, where outcomes depend on nuance, trade-offs, and imperfect human behavior.

The Part LLMs Can’t Do

Good strategists evaluate context, question assumptions, and recognize when a tactic looks impressive but doesn’t pass the sniff test. That’s critical thinking — and it’s where generative tools fall short.

Mediologists are curious.

Critical thinking involves:

- Curiosity: Asks questions and seeks deeper understanding

- Research skills: Finds and evaluates reliable, credible information

- Pattern recognition: Connects ideas and spots trends across information

- Bias identification: Recognizes personal and external biases and stays objective

LLMs can support parts of this process. They can surface patterns and retrieve information quickly. What they can’t do is decide what matters, what’s missing, or what’s risky.

They aren’t curious unless prompted.

They don’t understand credibility beyond statistical likelihood.

And they can’t recognize bias — even when they sound perfectly neutral.

That’s the risk. Not just that models can be wrong, but that they’re convincing when they are.

Strategy requires skepticism. It requires lived experience, intuition, and accountability — things that don’t exist in training data.

LLMs can accelerate thinking. They can’t replace it.

What LLMs Are Good For (And Where We Use Them)

Fastest in the land – cheetah or LLM?

Used properly, LLMs can be genuinely helpful. They’re great accelerators:

- Speeding up research summaries

- Stress-testing messaging ideas

- Helping teams get unstuck on a blank page

They can support the work. They just can’t own it.

They can speed-read the internet. Helpful? Absolutely. Ready to run your media plan? Not quite.

Here are three behaviors marketers can adopt to use LLMs better:

- Ask better questions of tools

- Separate speed from judgment

- Treat LLM outputs as inputs, not answers

Predicting the Future of LLMs

Despite the panic, the biggest risk right now isn’t that brands aren’t using AI enough. It’s that they’re outsourcing thinking because the tool sounds confident.

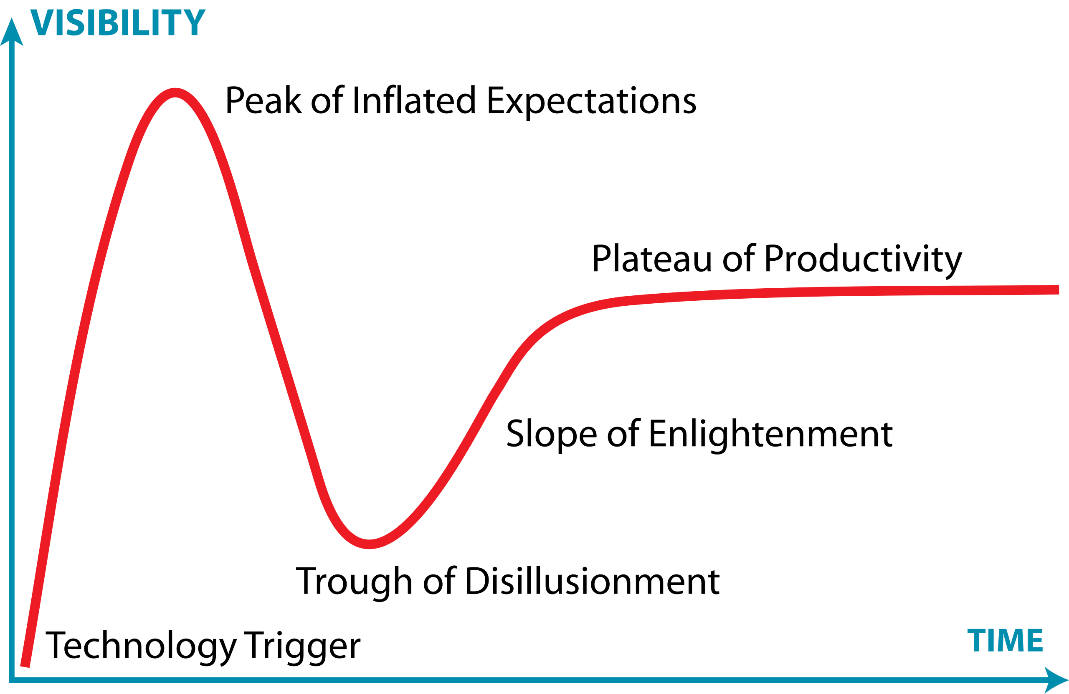

We’ve seen this song and dance before, in digital advertising and beyond. The dot-com fever of the late ’90s ended in a bubble that burst in the early 2000s, once reality set in. The pandemic-era excitement around NFTs in 2021 was followed by a market reset in 2023.

Gartner Hype Cycle Phases

After all, it’s a cycle, one visualized as the Gartner Hype Cycle: a framework that shows the maturity, adoption, and social application of specific technologies.

Like other visual models of complex ideas, it’s not perfect. Neither is the marketing funnel, and we’re not about to stop using that.

It’s appealing because we recognize an element of truth in it. New technology gets wrapped in excitement. It promises precision, scale, and certainty. And only later do we stop and ask whether it delivered outcomes — or just some flashy distractions.

Every hype cycle promises transformation. Few promise a learning curve.

If the ‘AI’ craze were to fit this cycle, we’d be at the ‘Peak of Inflated Expectations’ stage. Marketers and businesses with pie-in-the-sky dreams right now should buckle up for a harsh dose of reality.

Or, we can temper our expectations early, and look ahead to what is actually productive.

Calm is a Competitive Advantage

Ultimately, the brands that win in moments like this aren’t the ones chasing every new capability. They’re the ones who understand what tools are for — and what they aren’t.

LLMs aren’t something to fear. They’re a good tool to use where it makes sense. They can make good teams faster. They can’t make unclear strategies smarter.

And if you’re worried you’re falling behind because you’re asking questions instead of blindly adopting tools? That’s usually a good sign.

Tools will keep changing. Clear thinking, calm confidence, and human judgment still win.

This is why we think media strategy still belongs with people who are comfortable saying ‘not yet’ or ‘not like this.’

If that sounds like the kind of partner you want in the room, we should talk.